By Florencia Edgina

A version of this blog was originally published on the Data Values Digest.

Future Advocacy is working with the Global Partnership for Sustainable Development Data on the ‘Power of Data’, which is one of the UN’s 12 High Impact Initiatives that launched in New York last September. The initiative aims to drive investment in strong data systems to revolutionize decision making, accelerate inclusive digital transformation agendas and open up new economic opportunities. This article is a contribution to the Data Values Project, a campaign led by the Global Partnership.

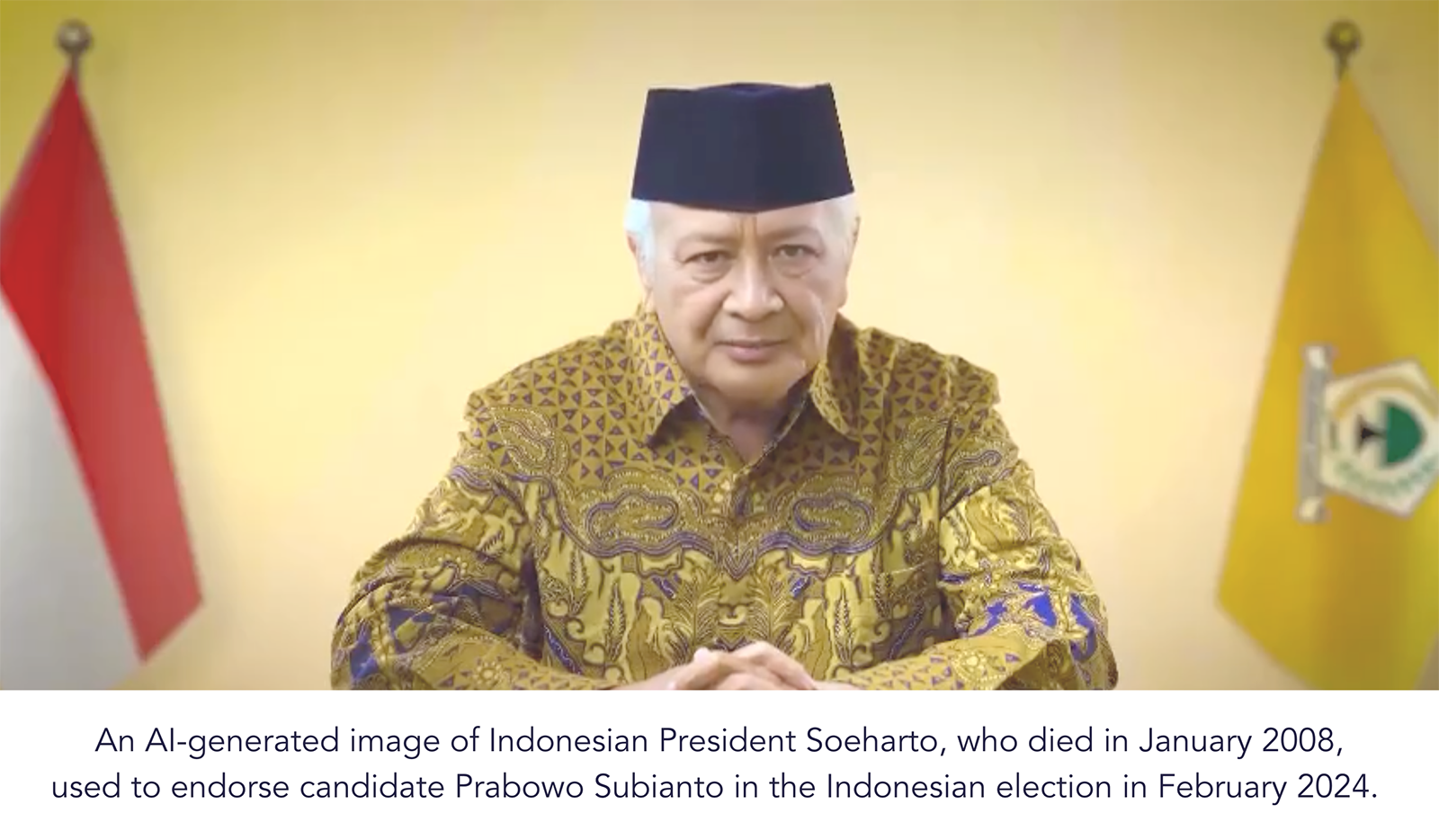

It had been 16 years since I’d heard the voice of Indonesia’s second president live on TV. But that changed last month when a deepfake video of President Soeharto surfaced.

Mr Soeharto, the leader of a 32-year-long regime in Indonesia with a controversial human rights record, once again became a trending topic amongst Indonesian youth, all thanks to the power of Artificial Intelligence (AI).

The AI-generated video of him endorsing candidate Prabowo Subianto in the Indonesian election this February has racked up more than 4.7 million views on X and even more on TikTok and YouTube.

Indonesians are no strangers to these kinds of hoaxes, which reached record highs in the last presidential election in 2019. But this election is special.

The large share of youth voters in Indonesia (52 percent), combined with unprecedented developments in AI over the past year, has made Indonesia a fascinating example of a highly contested election at a technological watershed, a phenomenon the media has dubbed ‘deepfake electioneering’. The video of Soeharto was only one of many AI-generated campaign images and videos circulating at the time, forcing the Indonesian Ministry of Communications and Informatics to issue guidelines on the ethics and dangers of AI misuse in the election.

Indonesia, which holds the largest single-day election in the world, might be an extreme example, but it is far from the exception. Journalists, election watchers, and even tech companies are desperately trying to win the race to debunk AI-generated disinformation in political campaigning around the world.

South Korea is imposing a 90-day ban on deepfake campaign materials to prepare for its upcoming legislative election in April. In the UK, MPs have called for more regulation of deepfakes ahead of polling day in 2024. US lawmakers have gone even further, introducing legislation to combat AI deepfakes and misinformation in at least 14 states in 2024.

This year, as the largest ever number of people head to the polls, there are two key questions at the forefront of our minds: How will deepfake and AI-generated content impact our democracies? And how can the principles in the Data Values Manifesto help to limit their impact?

What is a deepfake?

To answer these questions we must first understand deepfakes better. A deepfake is a manipulated synthesis of audio, video, or other forms of digital content. Its main difference from more basic ‘photoshopped’ images or videos is the use of new AI techniques.

The term itself comes from the idea that the technology used to create these ‘fakes’ involves ‘deep learning techniques’, a subset of machine learning. Deep learning methods allow computers to process data in a way that is inspired by the human brain. They are able to recognize complex patterns in audiovisual materials (pictures, text, sounds, etc.) and then replicate them in a newly generated product.

Deepfakes cause widespread harm

As a campaigner working on online safety issues, I have learnt a lot about the potential impact of deepfakes on people’s lives. Deepfakes take our biggest concerns over data privacy and cyber security breaches and present them in high definition. They take agency away from the people being misrepresented, and create a culture of confusion and doubt.

At Future Advocacy, we studied the perceived negative impact of deepfakes on elections, particularly on misinformation and public distrust. In 2019, we created two AI-doctored videos with UK artist Bill Posters showing the two election contenders, Boris Johnson and Jeremy Corbyn, endorsing each other for Prime Minister. The videos went viral online.

Our study showed how deepfakes can promote misinformation such as false endorsement, which can rise to the level of defamation. By using the image of a public figure, deepfakes limit the public’s ability to work out which sources of information are reliable and to distinguish real from fake news.

This prevents countries from executing one of the fundamental elements of democracy: holding free and fair elections.

Accurate, publicly available information is essential to allow citizens to make evidence-driven decisions during elections and actively participate in the democratic process. Research shows that people who have high trust in politics and governments are more likely to turn out to vote. If false information dominates in critical times such as an election, public trust can be weakened and democratic participation can be affected.

Over the last two years, we have started to witness real-life manifestations of deepfakes’ harmful impact. In the US, a doctored voice of Joe Biden urged New Hampshire Democrats not to vote in the presidential primaries. In Slovakia, a fake AI audio recording of the leader of the progressive party plotting to rig the election went viral during the country’s electoral process, with the candidate finally losing in September last year.

What has been done to stop them?

Most efforts to stop deepfakes are centered around detection technologies that can automatically detect false facial features or unique elements in manipulated digital content.

But the current detectors are inadequate.

Research shows that databases used to train automated detectors lack diversity and can be strongly biased against certain ethnic groups and genders. Detection technology is far from transparent and does not allow us to look inside the process of how it categorizes something as a deepfake or not. It’s also inaccurate, as real-life videos can be manipulated to get around the detectors’ binary grading scale of real or fake.

Once a deepfake goes online, it can be shared globally and becomes borderless. This makes it challenging to regulate the issue. Government legislation often has limited geographical jurisdiction, and despite the appetite in the tech community to address this gap, protecting everyone’s online security should be our collective responsibility.

How data values can help us

This is where initiatives like the Data Values Project come in. The project is a global policy and advocacy campaign aiming to unlock the value of data for all by developing common positions on data ethics, rights and governance issues. It aims to challenge power structures in data to ensure that we all share in the benefits of its collection and use.

By bringing together diverse and underrepresented perspectives, the project developed the Data Values Manifesto, which has five key pillars. These data values can help us be clear-eyed about the actions needed to minimize the negative impact and risks of deepfakes: increasing public participation, strengthening data skills, and improving data transparency.

- Better public participation is a key factor in reducing the potency of deepfakes and holding their creators accountable. People whose lives are affected by the misleading information deepfakes peddle must be included in the decision-making processes to regulate them. Investing in public participation will mean that efforts to curb deepfakes are more likely to be citizen-centered and meaningfully protect people from harm.

- Investing in people’s data and AI skills is one way to rein in the impact of deepfakes. One study showed that people correctly identify deepfakes in 50 percent of cases. While deepfakes are becoming increasingly sophisticated, they often contain a set of flaws that can help people spot the signs. These skills need to be democratized so that everyone – including those in groups more vulnerable to deepfakes – can develop the skills to identify misinformation and disregard it, focusing instead on facts and evidence.

- Creating cultures of transparency and public trust is essential in the era of growing AI-manipulated content. Some countries are exploring legislation requiring all AI-generated content to be clearly labeled. While this isn’t a magic bullet that would tackle deepfakes entirely, it could go some way to improving transparency and help build back the public’s trust in the information they are consuming. Civil society can also help to minimize public misinformation from deepfakes by raising awareness of non-consensual data usage and fact-checking.

Deepfakes are developing at an alarming rate and our response must get better, and get better fast.

Maintaining a robust democracy means holding the government and government candidates accountable, and this is only possible through trustworthy, accountable data practices.

With at least four billion people going to the polls this year, let’s make sure we vote to tackle deepfakes, promote data transparency and restore trust in our democracies.